Top 20 Informatica Performance Tuning Tips

Saturday, May 21, 2016

/

No Comments

Here are a few points which will get you started with Informatica Power center Performance Tuning .Some of these tips are very general in nature please consult your project members before implementing them in your projects.

1) Optimize the Input Query if it’s Relational (e.g. Oracle table) source –

- Reduce no.of rows queried by using where conditions instead of Filter/Router transformations later in the mapping. Since you choose fewer rows to start with, your mapping will run faster

- Maker sure appropriate Indexes are defined on necessary columns and analyzed. Indexes must be especially defined on the columns in the ‘where’ clause of your input query.

- Eliminate columns that you do not need for your transformations

- Use ‘Hints’ as necessary.

- Use sort order based on need

Note: For # i results will vary based on how the table columns are Indexed/queried…etc.

2) Use the Filter transformation as close to the SQ Transformation as possible.

3) Use sorted input data for Aggregator or Joiner Transformation as necessary.

4) Eliminate un-used columns and redundant code in all the necessary transformations.

5) Use Local variables as necessary to improve the performance.

6) Reduce the amount of data caching in Aggregator.

7) Use parameterized input query and file for flexibility.

8) Changed memory related settings at workflow/session level as necessary.

9) When use multiple condition columns in Joiner/Lookup transformation make sure to

use numeric data type column as first condition.

10) Use persistent cache if possible in Lookup transformations.

11) Go through the sessions Logs CLOSELY to find out any issues and change accordingly

12) Use overwrite queries in Lookup transformation to reduce the amount of data cached.

13) Make sure the data type and sizes are consistent throughout the mapping as much

as possible.

14) For Target Loads use Bulk Load as and when possible.

15) For Target Loads use SQL * Load with DIRECT and UNRECOVERABLE option for large volume of data loads.

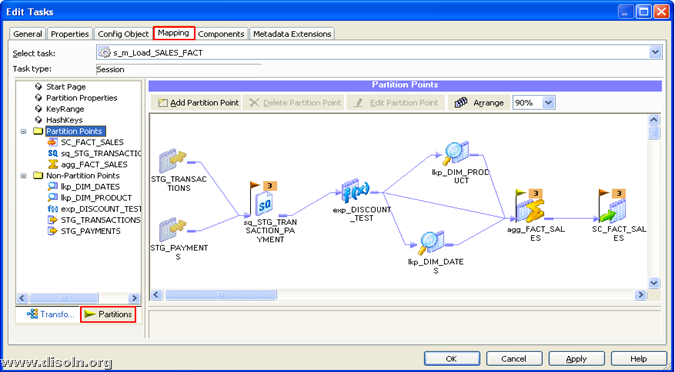

16) Use Partitioning options as and when possible. This is true for both Informatica and Oracle. For Oracle, a rule of thumb is to have around 10M rows per partition

17) Make sure that there are NO Indexes for all “Pre/Post DELETE SQLs” used in all

the mappings/Workflows.

18) Use datatype conversions where ever possible

- 1) Use ZIPCODE as Integer instead of Character this improves the speed of the lookup Transformation comparisons.

- 2) Use the port to port datatype conversions to improve the session performance.

19) Use operators instead of functions

e.g: For concatenation use “||” instead of CONCAT

20) Reduce the amount of data writing to logs for each transformation by setting

log settings to Terse as necessary only.

21) Use Re-usable transformations and Mapplets where ever possible.

22) In Joiners use less no.of rows as Master ports.

23) Perform joins in db rather than using Joiner transformation where ever possible.